Real-time motion planning

Motion planning can be defined as the problem of finding a motion between two rest configurations of the robot that does not collide with any obstacle in the environment.
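In the usual configuration-space notation, this definition can be written compactly. The symbols below are assumptions introduced here for illustration and do not come from the cited papers: $\mathcal{C}$ is the robot configuration space, $\mathcal{C}_{\text{obs}}$ the set of configurations in which the robot intersects an obstacle, and $T$ the motion duration.

$$
\text{find } q : [0, T] \to \mathcal{C}_{\text{free}} = \mathcal{C} \setminus \mathcal{C}_{\text{obs}}
\quad \text{such that} \quad
q(0) = q_{\text{start}}, \; q(T) = q_{\text{goal}}, \; \dot{q}(0) = \dot{q}(T) = 0 .
$$

The zero-velocity conditions express that the start and goal are rest configurations.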

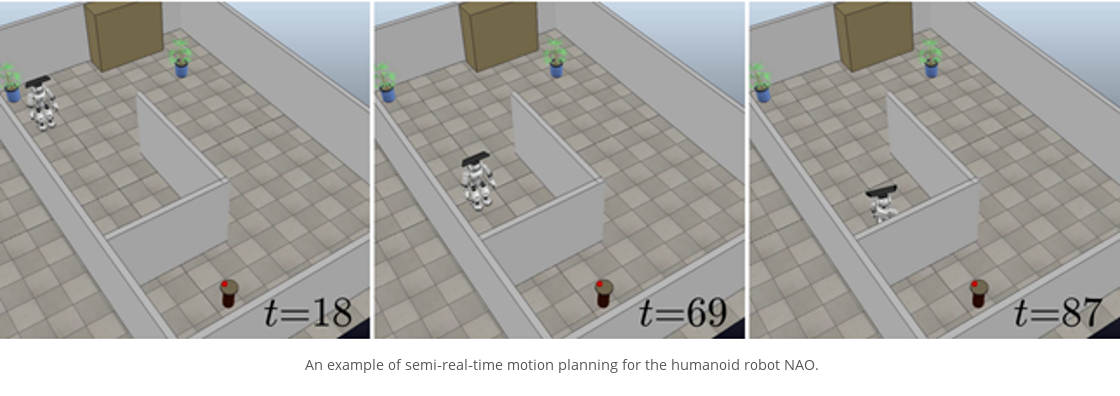

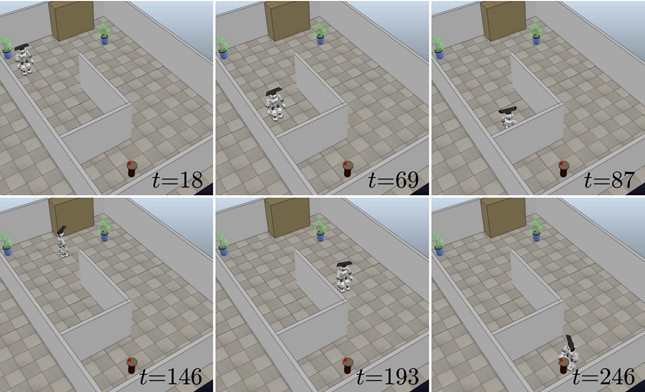

Most planning algorithms in the literature (e.g., [1], [2], [3]) work offline: the planner computes the robot's motion in a static environment before the robot starts moving. This is a limitation when the environment contains dynamic objects (e.g., humans) or when the robot does not know the environmental map a priori. For these reasons, the aim of this research is to develop real-time motion planning algorithms that incrementally build a (camera-based) environmental map and continuously generate the robot motion within this map. We have already proposed some solutions for humanoid robots [4], [5]. However, these solutions are not purely real-time, since they alternate planning and execution phases (see [4], [5] for details). The goal of this research line is to advance this topic and propose fully real-time planners.
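To make the idea of alternating planning and execution concrete, here is a minimal toy sketch in Python. It is not the method of [4] or [5]: it assumes a point robot on a small 2D grid, a hypothetical `sense` function that reveals obstacles within one cell of the robot, and breadth-first search as a stand-in planner. All function names and parameters are illustrative assumptions.

```python
from collections import deque


def bfs_path(known_obstacles, start, goal, size):
    """Shortest 4-connected path on a size x size grid that avoids known obstacles."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if inside and nxt not in known_obstacles and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable given the current map


def sense(true_obstacles, position, radius=1):
    """Hypothetical sensor: obstacles within `radius` cells (Chebyshev distance)."""
    px, py = position
    return {(ox, oy) for (ox, oy) in true_obstacles
            if max(abs(ox - px), abs(oy - py)) <= radius}


def navigate(true_obstacles, start, goal, size):
    """Alternate execution (one step) and planning (replan when the path is blocked)."""
    known = set(sense(true_obstacles, start))  # incrementally built map
    position, trajectory, replans = start, [start], 0
    path = bfs_path(known, position, goal, size)
    while position != goal:
        if path is None:
            return trajectory, replans, False
        position = path[1]           # execute one step of the current plan
        trajectory.append(position)
        path = path[1:]
        known |= sense(true_obstacles, position)  # update the map
        if any(cell in known for cell in path):   # plan invalidated by new data
            replans += 1
            path = bfs_path(known, position, goal, size)
    return trajectory, replans, True


if __name__ == "__main__":
    wall = {(2, 0), (2, 1), (2, 2), (2, 3)}  # unknown to the robot at the start
    trajectory, replans, reached = navigate(wall, (0, 0), (4, 0), size=5)
    print("reached goal:", reached)
    print("replans:", replans)
    print("trajectory:", trajectory)
```

In this sketch the robot stops to replan whenever newly sensed obstacles block its current path, which mirrors the alternation of phases described above. A purely real-time planner would instead keep refining the motion while the robot is moving.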

Solving the motion planning problem in real time would have a huge impact on robotics: from human-robot collaboration and interaction to the autonomous fulfilment of tasks, many applications would benefit from it (e.g., in industry, agriculture, and urban search and rescue).

We are also interested in combining motion planning with reinforcement learning techniques, so that the memory of previously explored situations provided by reinforcement learning helps the planner find a solution to the motion planning problem.

Fig 1: An example of semi-real-time motion planning for the humanoid robot NAO. The robot has a Kinect camera on its head that is used for building an environmental map. The robot has no prior information about the environment and plans its motion with the aim of grasping the red ball on the table. While the robot walks, the camera on its head detects the wall, and the robot replans its motion in order to fulfill the task.

Contact

If you have any questions, please contact [email protected]

References

[1] M. Cognetti, P. Mohammadi, G. Oriolo, and M. Vendittelli, “Task-oriented whole-body planning for humanoids based on hybrid motion generation,” in 2014 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, 2014, pp. 4071–4076.

[2] M. Cognetti, P. Mohammadi, and G. Oriolo, “Whole-body motion planning for humanoids based on CoM movement primitives,” in 2015 15th IEEE-RAS Int. Conf. on Humanoid Robots, 2015, pp. 1090–1095.

[3] M. Cognetti, V. Fioretti, and G. Oriolo, “Whole-body planning for humanoids along deformable tasks,” in 2016 IEEE Int. Conf. on Robotics and Automation, May 2016, pp. 1615–1620.

[4] P. Ferrari, M. Cognetti, and G. Oriolo, “Anytime whole-body planning/replanning for humanoid robots,” in 2018 IEEE-RAS 18th Int. Conf. on Humanoid Robots (Humanoids), Nov 2018, pp. 1–9.

[5] P. Ferrari, M. Cognetti, and G. Oriolo, “Sensor-based whole-body planning/replanning for humanoid robots,” in 2019 IEEE-RAS 19th Int. Conf. on Humanoid Robots (Humanoids), 2019, pp. 511–517.